There’s a little lemma used in the Importance Weighted Autoencoder paper [1]

EM algorithm and mixtures of distributions

As a data scientist in the retail banking sector, I naturally do a lot of customer segmentation. Recently I was assigned to investigate what kind of behavior our investment fund customers exhibit online. I used Gaussian mixture models to see what kind of “in between” After the project was concluded, I decided to write a blog post about Gaussian mixtures, as I found the theory quite interesting.

GAN and better GANs

Really short intro

Given a random variable $X$, a GAN (Generative Adversarial Net) aims to learn $P(X)$ via generative methods. Specifically, it involves two neural networks playing a minimax game: one constantly generates data points that resemble $X \sim P(X)$ and tries to pass them off as “real” samples, while the other tries to tell the actual real samples apart from the generated ones. The game goes on until both reach a Nash equilibrium.
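
The minimax game described above is usually written as a single value function. As a sketch, with notation that is assumed here rather than taken from this excerpt ($G$ is the generator, $D$ is the discriminator, $z$ is a noise input drawn from a prior $p_z$, and $p_{\text{data}}$ plays the role of $P(X)$):

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}[\log D(x)] + \mathbb{E}_{z \sim p_z}[\log(1 - D(G(z)))]
$$

The discriminator pushes $D(x)$ toward 1 on real samples and $D(G(z))$ toward 0 on generated ones, while the generator pushes $D(G(z))$ toward 1.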

Feature engineering - Encoding categorical variables (Part 1)

Categorical variables are discrete variables that take values from a finite set. Examples of some categorical variables are

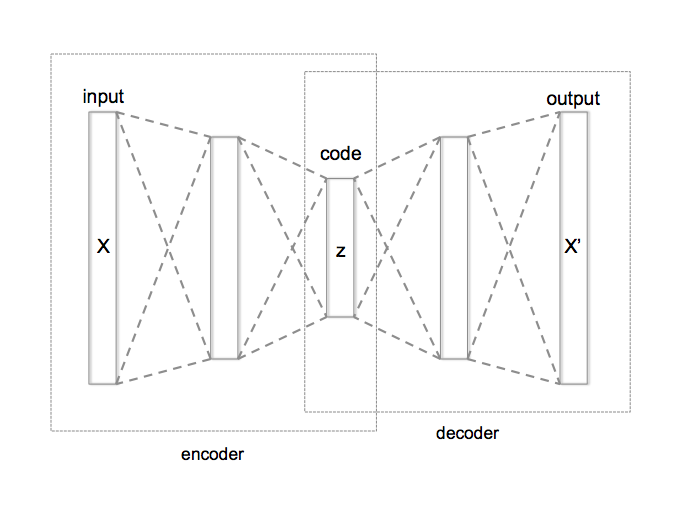
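
To make the idea of encoding concrete, here is a minimal sketch of one common scheme, one-hot encoding, in plain Python. The function name `one_hot_encode` and the color values are hypothetical and chosen only for illustration; the excerpt itself does not say which encodings the post covers.

```python
def one_hot_encode(values):
    """Map each categorical value to a 0/1 vector with a single 1."""
    # Fix a deterministic column order by sorting the distinct categories.
    categories = sorted(set(values))
    index = {category: i for i, category in enumerate(categories)}

    encoded = []
    for value in values:
        row = [0] * len(categories)
        row[index[value]] = 1  # mark the column of this value's category
        encoded.append(row)
    return categories, encoded


# Hypothetical example column with three distinct categories.
categories, encoded = one_hot_encode(["red", "green", "blue", "green"])
```

Each row has exactly one nonzero entry, so the finite set of values is represented without implying any ordering between categories.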

Autoencoder (part 1)

What is an autoencoder

An autoencoder is a deep neural network architecture used in unsupervised learning. It consists of three parts: an encoder, a decoder, and a latent space. The goal of an autoencoder is to encode high-dimensional data into a low-dimensional subspace by means of feature learning.
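
The three parts can be illustrated with a deliberately tiny sketch in plain Python. It is a simplification, not a deep network: the encoder and decoder are single linear maps, the latent space is one-dimensional, and the toy data, learning rate, and function names are all assumptions made for this example.

```python
import random


def encode(w, x):
    """Encoder: project an input vector onto a 1-D latent value."""
    return sum(wi * xi for wi, xi in zip(w, x))


def decode(v, z):
    """Decoder: map a latent value back to the input space."""
    return [vi * z for vi in v]


def reconstruction_loss(w, v, data):
    """Mean squared reconstruction error over the data set."""
    total = 0.0
    for x in data:
        x_hat = decode(v, encode(w, x))
        total += sum((a - b) ** 2 for a, b in zip(x_hat, x))
    return total / len(data)


def train(data, steps=2000, lr=0.01, seed=0):
    """Fit encoder weights w and decoder weights v by full-batch gradient descent."""
    rng = random.Random(seed)
    dim = len(data[0])
    w = [rng.uniform(-0.5, 0.5) for _ in range(dim)]
    v = [rng.uniform(-0.5, 0.5) for _ in range(dim)]
    for _ in range(steps):
        grad_w = [0.0] * dim
        grad_v = [0.0] * dim
        for x in data:
            z = encode(w, x)
            err = [a - b for a, b in zip(decode(v, z), x)]
            err_dot_v = sum(e * vi for e, vi in zip(err, v))
            for i in range(dim):
                grad_v[i] += 2.0 * err[i] * z / len(data)
                grad_w[i] += 2.0 * err_dot_v * x[i] / len(data)
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        v = [vi - lr * g for vi, g in zip(v, grad_v)]
    return w, v


# Toy 2-D data that lies on a line, so one latent dimension is enough.
data = [[t, 2.0 * t] for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]
w, v = train(data)
final_loss = reconstruction_loss(w, v, data)
```

Because the toy data has only one degree of freedom, the 1-D latent value can carry all the information needed to reconstruct each point; real autoencoders replace the linear maps with nonlinear networks.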